Introduction

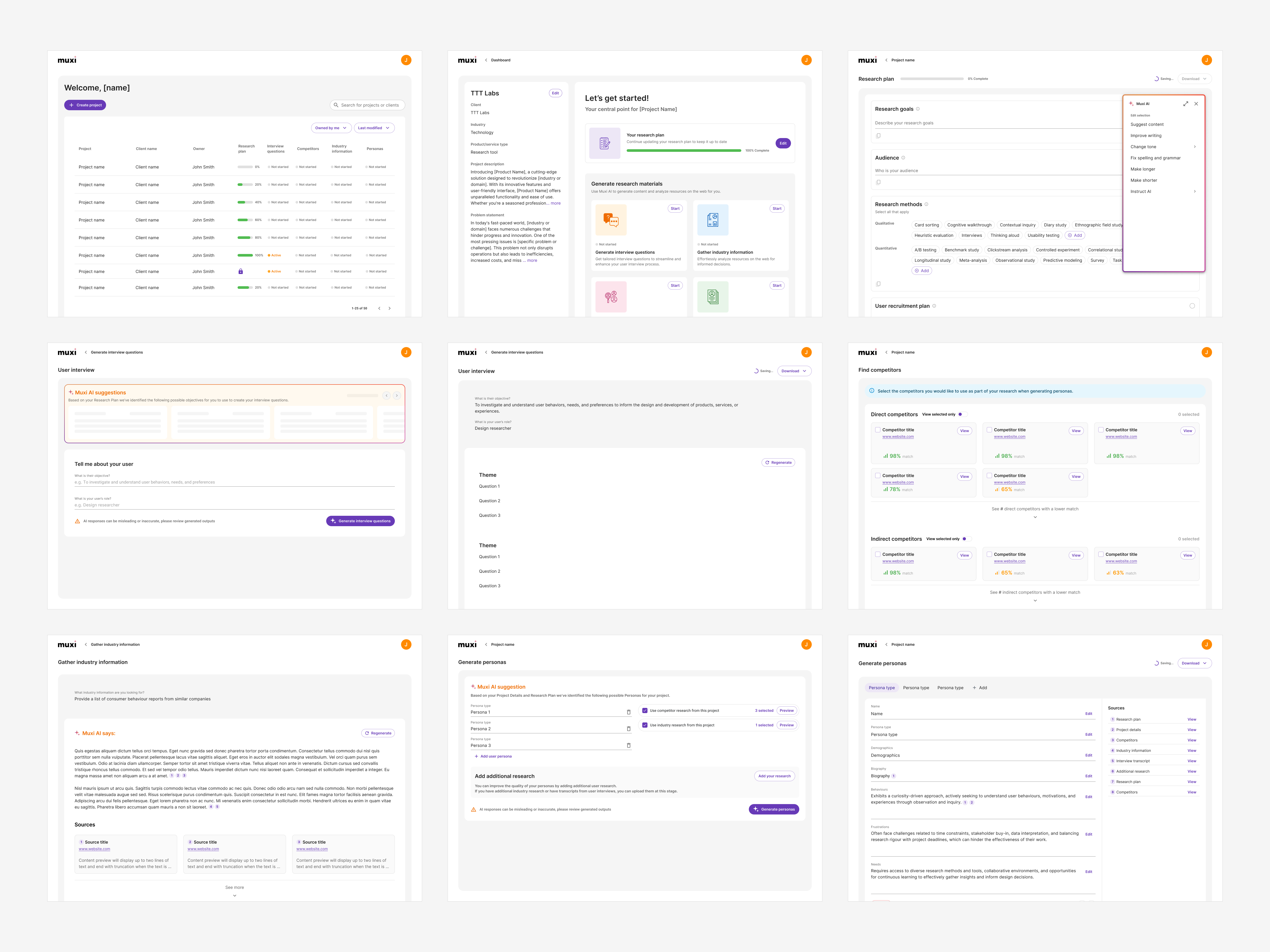

Muxi, our first in-house AI-powered product, transforms the research process by reducing days of work to minutes. It streamlines lengthy research workflows, cutting the time needed to conduct research and build personas from 2-3 days to just minutes. Developed with a commercial vision, Muxi aims to be a tool researchers would be willing to pay for: one that solves real problems and delivers enough value to justify a subscription.

Key achievements

- Designed my very first AI tool

- Reduced the time it takes to conduct research from days to minutes

Research

Uncovering user needs and AI integration challenges

With the wider team on board with this idea, a group was assembled to build a proof of concept (POC) to test our capabilities with AI. I was brought on afterward to design and expand the remaining features needed to turn it into a minimum viable product (MVP).

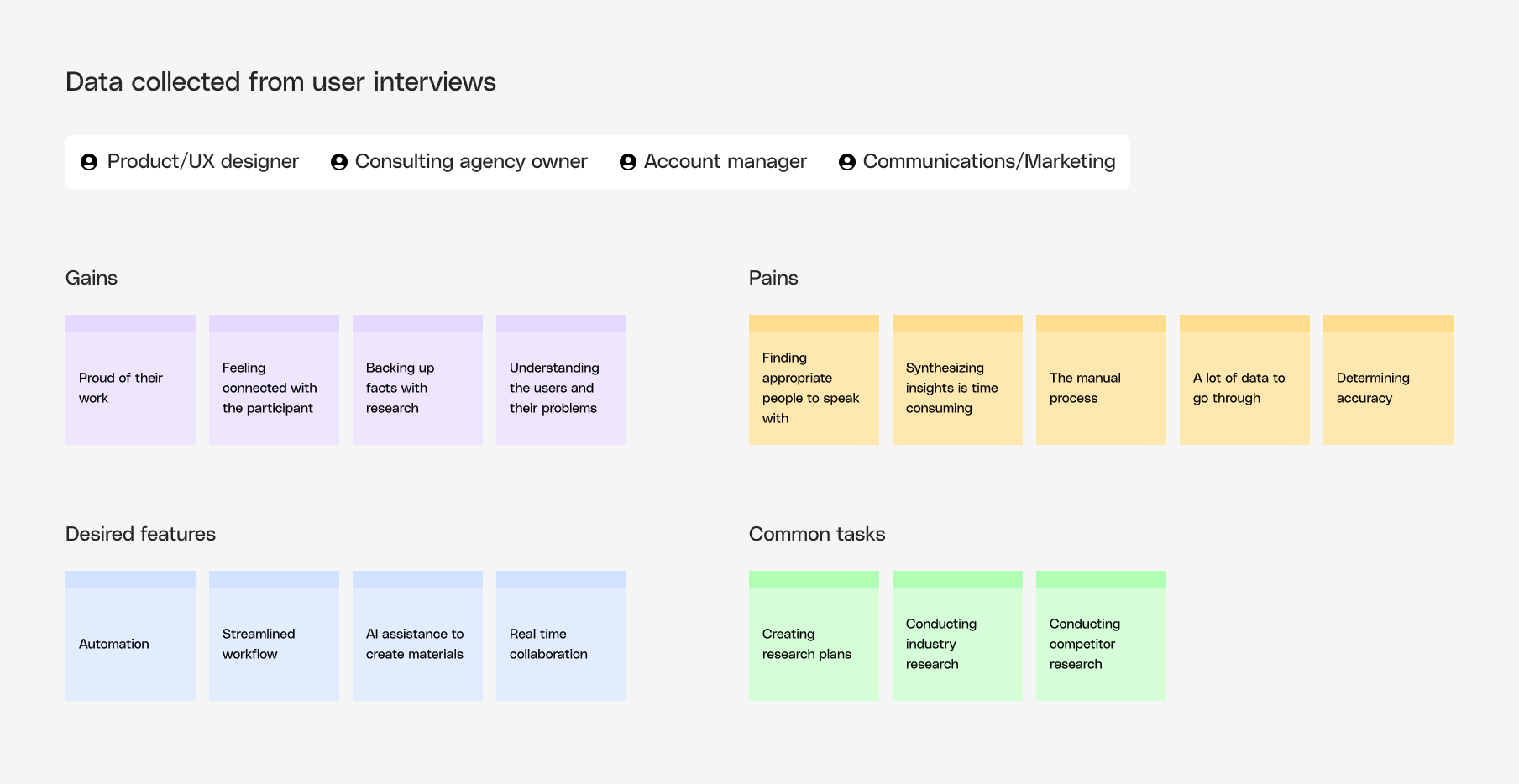

I conducted user interviews to understand how other researchers felt about their research process, their pain points and what would help improve it. I discovered that people who conduct research have a strong sense of accountability for, and pride in, the outcomes of that research. They particularly enjoyed connecting with the people they spoke to.

When I brought these findings back to the team, we decided that even though AI was integral to the product, we didn't want to take away the human element. We wanted users to keep control over what they do and the results that come from it.

Iterations

Designing AI-assisted features for enhanced user experience

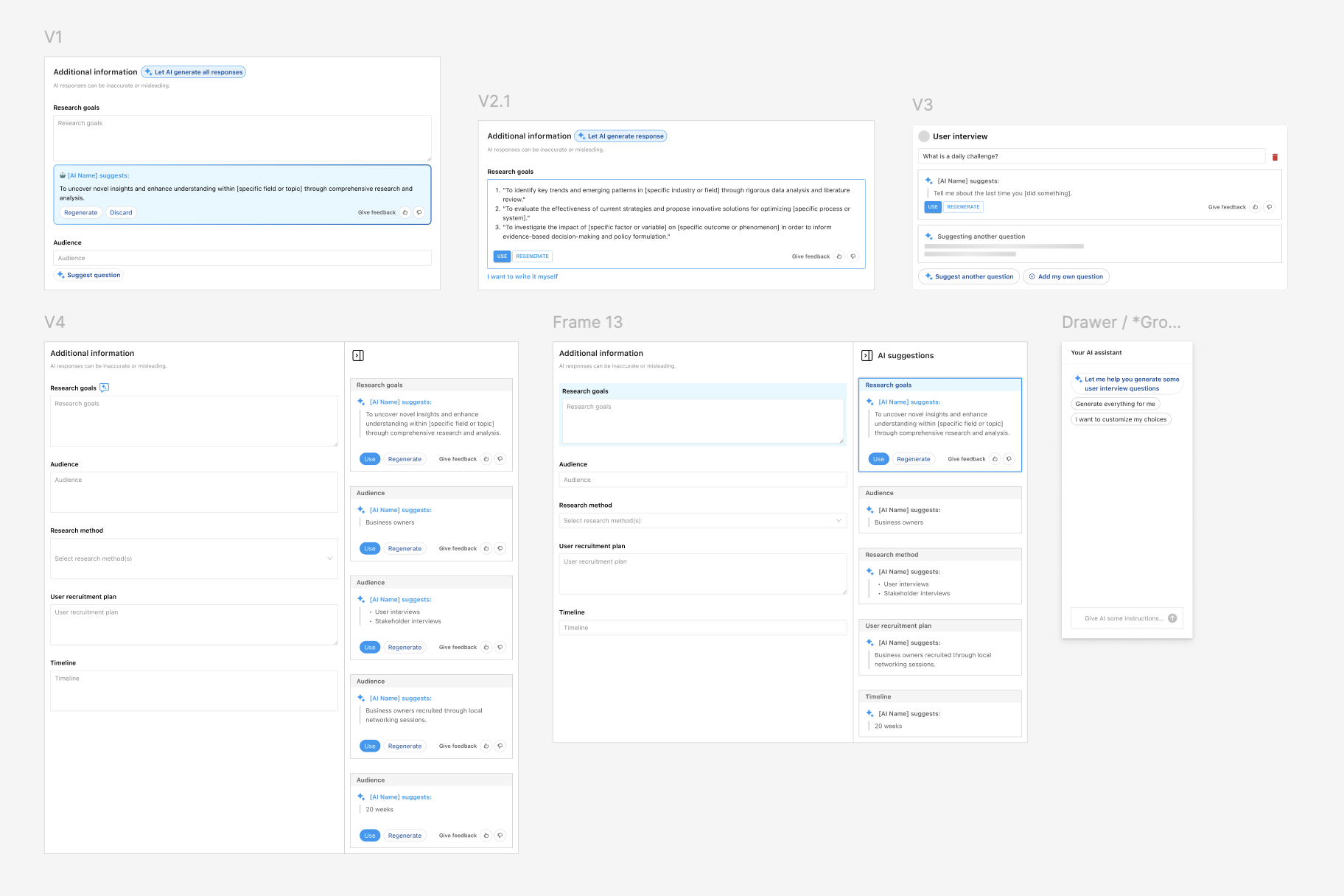

Building on the POC and information architecture, I focused on the product's core feature: AI assistance. Key considerations included:

- how to incorporate it into each feature

- how to display it without being too intrusive

- how to define the logic for triggering the AI

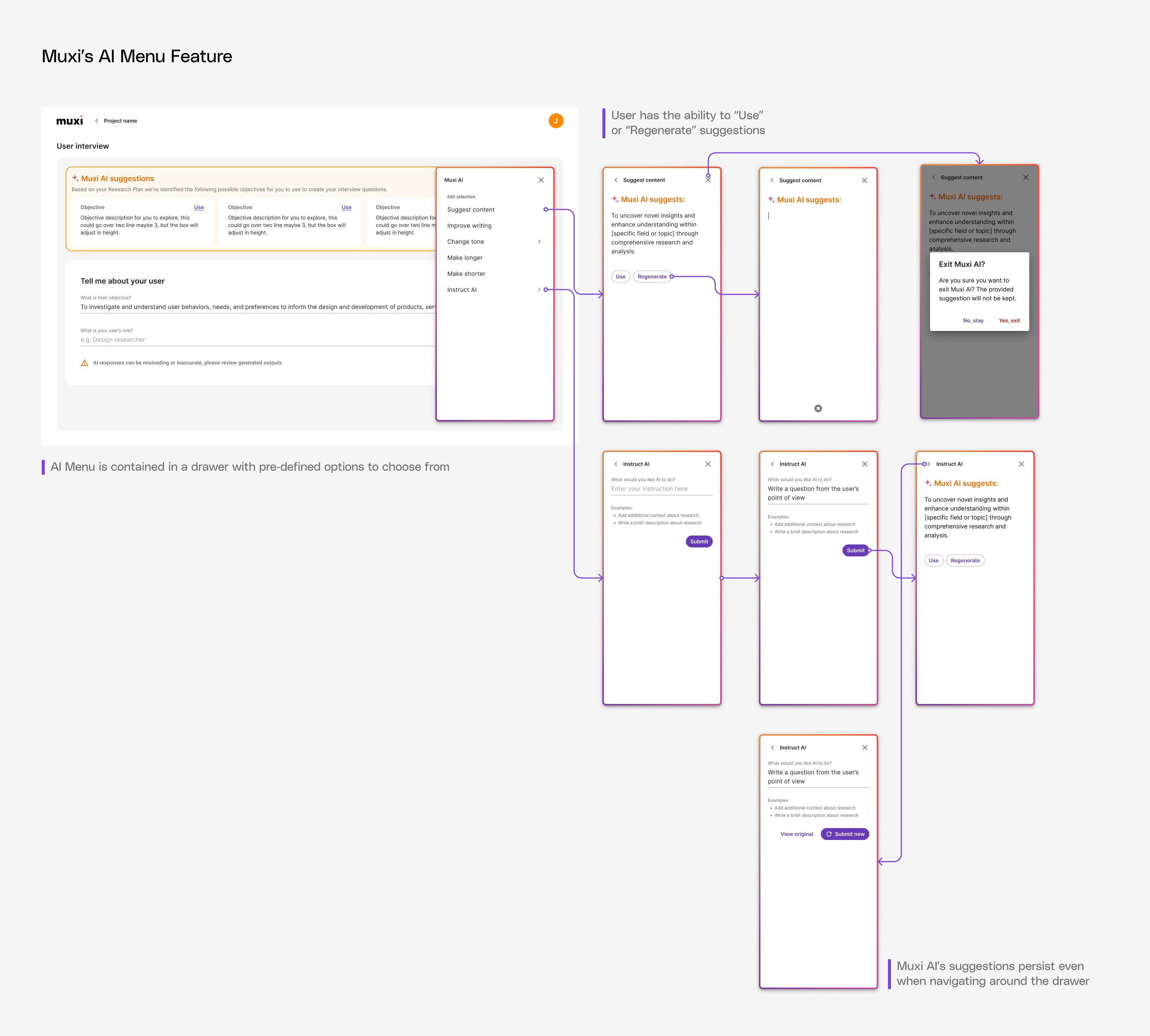

I explored different variations, researched other tools that use AI and worked closely with the engineers. In the end, I decided the best approach was a button that appears on hover. The actions that follow are shown in a drawer, so all AI-related actions are kept in one place.

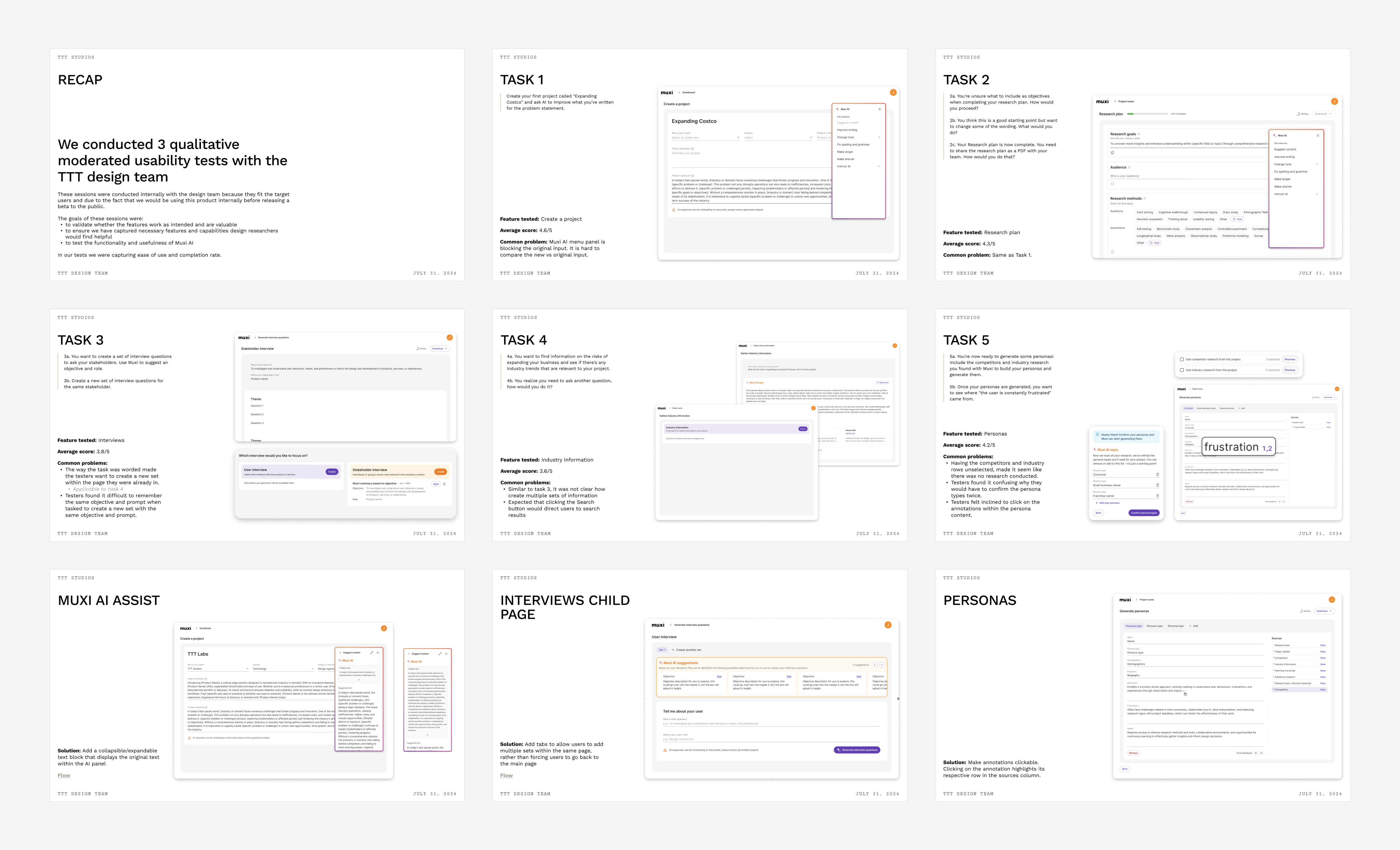

I conducted user testing with the internal design team, our primary initial users, to validate our assumptions before the beta release. We aimed to verify that the features worked as intended, provided value to researchers and demonstrated effective AI capabilities. Testing focused on three key areas: overall navigation, AI assist functionality and persona generation. The feedback was largely positive and validated our approach. In future testing sessions, I would provide more context to help users better understand each task.

Outcomes

Positive internal reception and time-saving impact

After considering each section where AI would be used, I decided to present it in a drawer. To maintain user control, rather than displaying the AI button prominently, I designed it to appear on hover in specific areas. When triggered, the drawer opens and presents users with pre-defined options.

With this tool, the time needed to conduct research and build personas from it dropped from 2-3 days to a matter of minutes.

The MVP was shared across the company, allowing everyone to try the tool and report any bugs they encountered. Next, we plan to refine the product before releasing a public beta.

To further test accuracy, I compared the tool's generated results with personas defined in past projects and found the results to be 80-90% ideal when measured against them.

Reflection

Navigating the challenges of AI-driven design

Designing my first AI product involved significant trial and error. I learned that AI logic needs to be highly specific to achieve the desired results. Determining the best way to display AI assistance required extensive experimentation, including with button design and output presentation. It wasn't a simple "click for AI results" process. We had to consider every step, including how the AI communicates answers, how to return control to the user (approving, requesting new answers or discarding), what pre-defined options to offer and the nuances of user interactions.

Because we plan to market this product, ongoing discussions about its future led to ad hoc changes that caused inconsistencies in design and logic. In hindsight, I'd prefer a more organized approach, with comprehensive documentation from the start that tracks logic, change requests and future features.